Automatically Countering Preenrollment Bias

TL;DR: Nimbus can adjust metrics to account for preenrollment bias (natural randomization variability) and, when possible, to improve the precision of inferences. This is currently configured by default for guardrail metrics (averages only), but it can also be used for custom analyses. We expect this to reduce the frequency of false positives, many of which we believe were caused by natural randomization variability.

Preenrollment Bias

In order to generate evidence for a causal hypothesis, we must account for all sources of confounding. We can do this either by manually controlling for every confounder (which is quite difficult) or by running randomized experiments, in which the randomization process guarantees that, on average, units in each treatment branch are balanced on all confounders.

Randomization guarantees balance on average, across large numbers of experiments, but for any given experiment and confounding dimension there is still the possibility of imbalance. This imbalance (or rather, confounding) challenges our goal of gathering causal evidence: an imbalance that carries into the treatment period is indistinguishable from a treatment effect. In the next section (Retrospective A/A tests) we describe a method for detecting these situations.
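How often chance imbalance shows up can be checked with a minimal simulation. This sketch (hypothetical data, NumPy/SciPy, not Nimbus code) splits one fixed set of users into two branches at random many times, applies no treatment, and counts how often Welch's t-test reports a significant difference. At α = 0.05, roughly 5% of splits are flagged, so across many experiments some chance imbalance is expected. The function name and data-generating choices are illustrative assumptions.

```python
import numpy as np
from scipy import stats


def aa_false_positive_rate(n_users=2000, n_splits=2000, alpha=0.05, seed=0):
    """Fraction of random 50/50 splits whose branches differ 'significantly'.

    No treatment is ever applied, so every significant result is chance imbalance.
    """
    rng = np.random.default_rng(seed)
    # Hypothetical skewed per-user metric (e.g. a usage count); fixed across splits.
    metric = rng.gamma(shape=2.0, scale=3.0, size=n_users)
    significant = 0
    for _ in range(n_splits):
        # Random 50/50 assignment: exactly half of the users land in "treatment".
        treated = rng.permutation(n_users) < n_users // 2
        _, p_value = stats.ttest_ind(metric[treated], metric[~treated], equal_var=False)
        significant += int(p_value < alpha)
    return significant / n_splits


rate = aa_false_positive_rate()
print(f"share of splits flagged as imbalanced: {rate:.3f}")  # close to alpha = 0.05
```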

Retrospective A/A tests

User behavior tends to be consistent over time. We've found week-to-week correlations of up to 80% for our key guardrail metrics. Given this strong correlation, we can look for evidence of imbalance during the pre-experiment period. We've been using the term preenrollment bias, though others use pre-experiment bias.

No treatment has been applied during the pre-experiment period, so our experimental cohorts should show no statistically significant difference across the dimensions (metrics) of interest. A statistically significant difference during this period is therefore evidence of bias. This technique is called a Retrospective A/A test.
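A minimal sketch of a retrospective A/A test for a single metric follows. It assumes per-unit pre-enrollment values and branch labels are already available. Jetstream reports confidence intervals on the relative uplift, but this sketch uses Welch's t-test as a simple stand-in. The function name, branch labels and simulated data are hypothetical, and a 10% pre-period imbalance is injected so the test has something to find.

```python
import numpy as np
from scipy import stats


def retrospective_aa_test(pre_metric, branch, control="control", treatment="treatment", alpha=0.05):
    """Compare two branches on a metric measured *before* enrollment.

    Returns the relative difference in means (treatment vs. control), the
    p-value of Welch's t-test, and whether the difference is significant.
    """
    pre_metric = np.asarray(pre_metric, dtype=float)
    branch = np.asarray(branch)
    ctrl = pre_metric[branch == control]
    trt = pre_metric[branch == treatment]
    _, p_value = stats.ttest_ind(trt, ctrl, equal_var=False)
    return {
        "relative_diff": trt.mean() / ctrl.mean() - 1,
        "p_value": float(p_value),
        "imbalanced": bool(p_value < alpha),
    }


# Hypothetical data: 10,000 users randomized 50/50, with a 10% pre-period
# imbalance injected into the treatment branch purely for illustration.
rng = np.random.default_rng(1)
branch = np.where(rng.random(10_000) < 0.5, "control", "treatment")
pre = rng.gamma(shape=2.0, scale=3.0, size=10_000)
pre[branch == "treatment"] *= 1.10
result = retrospective_aa_test(pre, branch)
print(result)
```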

We now automatically run Retrospective A/A tests for all Nimbus experiments to test for imbalance in guardrail metrics. These can be found alongside the other statistical results in the Jetstream data products.

In short, you can find them in the `moz-fx-data-experiments.mozanalysis.statistics_<slug>_<period>_1` tables, where `<slug>` is the experiment slug or ID in snake case (it can be found in the Experimenter UI). We run these analyses over two periods: the week prior to enrollment (`<period>` = `preenrollment_week`) and the 28 days prior to enrollment (`<period>` = `preenrollment_days_28`).

For example:

```sql
SELECT *
FROM `moz-fx-data-experiments.mozanalysis.statistics_fake_experiment_slug_preenrollment_week_1`
WHERE 1=1
  AND comparison = 'relative_uplift'
  AND comparison_to_branch = 'control'
  AND statistic != 'deciles'
  AND analysis_basis = 'exposures'
ORDER BY metric, branch, statistic
```
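Once these rows are loaded into Python (for example, with a BigQuery client), a natural next step is to list the metrics whose preenrollment relative-uplift interval excludes zero. The helper below is a hypothetical sketch. It assumes each row is a dict and that the interval bounds live in columns named `lower` and `upper` (the query above only confirms `metric`, `statistic` and `branch`). The example rows are made-up values for illustration. Check the actual table schema before relying on it.

```python
def flag_imbalanced(rows):
    """Return (metric, statistic, branch) for rows whose CI excludes zero.

    `rows` is an iterable of dicts, one per result row. The `lower` and
    `upper` column names (CI bounds) are assumptions for this sketch.
    """
    flagged = []
    for row in rows:
        lower, upper = row.get("lower"), row.get("upper")
        if lower is None or upper is None:
            continue  # no interval available for this row
        if lower > 0 or upper < 0:  # relative uplift CI does not contain 0
            flagged.append((row["metric"], row["statistic"], row["branch"]))
    return flagged


# Hypothetical rows, shaped like the output of the query above.
example_rows = [
    {"metric": "metric_a", "statistic": "mean", "branch": "treatment", "lower": -0.012, "upper": 0.018},
    {"metric": "metric_b", "statistic": "mean", "branch": "treatment", "lower": 0.004, "upper": 0.031},
]
print(flag_imbalanced(example_rows))  # [('metric_b', 'mean', 'treatment')]
```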

Covariate Adjustment & CUPED

What if the Retrospective A/A test flags evidence of an imbalance? Do we have to discard that metric from our analysis completely? Luckily, there are techniques to adjust for pre-experiment information. The most popular of these is CUPED (Controlled-experiment Using Pre-Experiment Data). We have implemented a CUPED-like technique using linear models.
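For intuition, here is a minimal sketch of classic CUPED. It is not the linear-model implementation Nimbus uses. The during-experiment metric `y` is shifted by a multiple of the centered pre-experiment metric `x`, with the multiplier chosen to minimize variance. Branch means of `y_adj` are then compared in place of those of `y`. The data are simulated (hypothetical) with a pre/post correlation of about 0.8. The variance of the adjusted metric falls to exactly `1 - r**2` of the original, where `r` is the sample correlation.

```python
import numpy as np


def cuped_adjust(y, x):
    """Classic CUPED: y_adj = y - theta * (x - mean(x)), theta = cov(y, x) / var(x).

    `y` is the during-experiment metric and `x` the same metric measured before
    enrollment, for the same units (pooled across branches).
    """
    y = np.asarray(y, dtype=float)
    x = np.asarray(x, dtype=float)
    theta = np.cov(y, x)[0, 1] / np.var(x, ddof=1)  # both use ddof=1
    return y - theta * (x - x.mean())


# Hypothetical data with a pre/post correlation of about 0.8.
rng = np.random.default_rng(0)
x = rng.normal(10, 2, size=10_000)            # pre-enrollment metric
y = x + rng.normal(0, 1.5, size=10_000)       # during-experiment metric
y_adj = cuped_adjust(y, x)

r = np.corrcoef(x, y)[0, 1]
ratio = np.var(y_adj) / np.var(y)
print(f"variance ratio {ratio:.3f} vs 1 - r^2 = {1 - r**2:.3f}")
```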

Inferences for the average treatment effect (ATE) are most commonly calculated by computing the average (mean) in each treatment branch and then computing the difference. However, this can also be calculated using linear models.

As an example, we can fit a model of the form:

$$Y_i = \beta_0 + \beta_1 T_i + \varepsilon_i$$

where $T_i$ is the treatment indicator (0 if control, 1 if treated) for the $i$-th unit. Inferences on the ATE can be found from the $\beta_1$ parameter. The point estimate and confidence interval will be identical (ref) to the point estimate and confidence interval of the absolute difference in means between the branches. Computing confidence intervals for the relative differences is more complex, but can be done using post-estimation marginal effects (ref).
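The equivalence can be checked numerically. The sketch below (NumPy/SciPy, hypothetical simulated data, not MozOLS) fits $Y_i = \beta_0 + \beta_1 T_i + \varepsilon_i$ by ordinary least squares with classical standard errors, where $T_i$ is the 0/1 treatment indicator. It confirms that $\hat\beta_1$ equals the difference in branch means and that $\hat\beta_0$ equals the control mean. For the relative difference $\beta_1/\beta_0$ it uses the delta method. This is one standard way to get an interval for a ratio of coefficients, shown here for illustration and not necessarily how Nimbus computes relative intervals.

```python
import numpy as np
from scipy import stats


def ols(X, y):
    """Ordinary least squares with classical (homoskedastic) standard errors."""
    n, k = X.shape
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    sigma2 = resid @ resid / (n - k)
    return beta, sigma2 * np.linalg.inv(X.T @ X)  # coefficients, covariance matrix


# Hypothetical data: 5,000 units, true absolute effect of 0.3 on a baseline of 10.
rng = np.random.default_rng(0)
n = 5_000
t = (rng.random(n) < 0.5).astype(float)          # treatment indicator T_i
y = 10 + 0.3 * t + rng.normal(0, 3, size=n)      # outcome Y_i

X = np.column_stack([np.ones(n), t])             # design matrix with columns [1, T_i]
beta, cov = ols(X, y)
z = stats.norm.ppf(0.975)                        # normal quantile for a 95% interval

# Absolute effect: beta_1 equals the difference in branch means.
diff_in_means = y[t == 1].mean() - y[t == 0].mean()
se_abs = np.sqrt(cov[1, 1])
abs_ci = (beta[1] - z * se_abs, beta[1] + z * se_abs)

# Relative effect beta_1 / beta_0 (beta_0 is the control mean), delta-method SE.
rel = beta[1] / beta[0]
grad = np.array([-beta[1] / beta[0] ** 2, 1 / beta[0]])  # d(rel)/d(beta_0), d(rel)/d(beta_1)
se_rel = np.sqrt(grad @ cov @ grad)
rel_ci = (rel - z * se_rel, rel + z * se_rel)

print(f"beta_1 = {beta[1]:.4f}, difference in means = {diff_in_means:.4f}")
print(f"absolute 95% CI: ({abs_ci[0]:.3f}, {abs_ci[1]:.3f})")
print(f"relative 95% CI: ({rel_ci[0]:.3%}, {rel_ci[1]:.3%})")
```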

Within this framework, it's simple to extend our experiment analysis to account for pre-experiment data: we include that data as a covariate in the model. That is, we instead estimate:

$$Y_i = \beta_0 + \beta_1 T_i + \beta_2 X_i + \varepsilon_i$$

where $X_i$ is the metric of interest ($Y$) for the $i$-th unit as measured during the pre-experiment period. As before, we're interested in inferences on $\beta_1$, but now these inferences will be:

- adjusted to account for pre-experiment information, and
- more precise, because the covariate explains part of the variation in the outcome.
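The precision gain can be seen by fitting both models, $Y_i = \beta_0 + \beta_1 T_i + \varepsilon_i$ and $Y_i = \beta_0 + \beta_1 T_i + \beta_2 X_i + \varepsilon_i$, on the same simulated data. In this sketch (NumPy only, hypothetical data, classical OLS standard errors rather than whatever MozOLS uses), the pre-period metric $X_i$ has a correlation of about 0.8 with the outcome $Y_i$, mirroring the week-to-week correlations mentioned earlier. Adjusting for $X_i$ shrinks the standard error of $\hat\beta_1$ by a factor of roughly $\sqrt{1-\rho^2} = \sqrt{1-0.8^2} = 0.6$, the same variance reduction $1-\rho^2$ that CUPED achieves.

```python
import numpy as np


def ols(X, y):
    """Ordinary least squares: coefficients and classical covariance matrix."""
    n, k = X.shape
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    return beta, (resid @ resid / (n - k)) * np.linalg.inv(X.T @ X)


# Hypothetical data: true effect 0.2, corr(X, Y) of about 0.8.
rng = np.random.default_rng(42)
n = 10_000
t = (rng.random(n) < 0.5).astype(float)          # treatment indicator T_i
x = rng.normal(10, 2, size=n)                     # pre-enrollment metric X_i
y = x + 0.2 * t + rng.normal(0, 1.5, size=n)      # during-treatment metric Y_i

ones = np.ones(n)
b_unadj, cov_unadj = ols(np.column_stack([ones, t]), y)   # Y ~ 1 + T
b_adj, cov_adj = ols(np.column_stack([ones, t, x]), y)    # Y ~ 1 + T + X

se_unadj = np.sqrt(cov_unadj[1, 1])
se_adj = np.sqrt(cov_adj[1, 1])
print(f"beta_1 unadjusted: {b_unadj[1]:.3f} (SE {se_unadj:.4f})")
print(f"beta_1 adjusted:   {b_adj[1]:.3f} (SE {se_adj:.4f})")
print(f"SE ratio: {se_adj / se_unadj:.2f}")  # roughly sqrt(1 - 0.8**2) = 0.6
```

In sample-size terms, cutting the variance to 36% of its original value gives roughly the precision of an unadjusted analysis with about 2.8 times as many users.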

Configuring covariate adjustment

Adjusting a new metric for preenrollment bias

To adjust a new metric, write or edit the custom config to do two things: (1) compute your metric over the preenrollment period (that is, run the retrospective A/A test for it), and (2) configure the adjustment.

To ensure that your metric is computed over the preenrollment period, add it to the metric list for the desired preenrollment period:

```toml
[metrics]
preenrollment_weekly = [
  'my_new_metric'
]
```

To configure the adjustment, first request that inferences on the mean be computed using linear models (the `linear_model_mean` statistic). Then configure that statistic to adjust using the period chosen above. For example:

```toml
[metrics.my_new_metric.statistics.linear_model_mean]  # estimate the mean using linear models

[metrics.my_new_metric.statistics.linear_model_mean.covariate_adjustment]  # adjust that estimate
period = "preenrollment_week"  # adjust using the same metric computed over the week prior to enrollment
```

For reference, see how adjustment is configured for the guardrail metrics (example).

Currently, custom configs only support adjusting a during-treatment metric using the pre-experiment version of the same metric. Adjusting a metric using a different metric, or using during-experiment data, is not supported. To do either, you'll need to perform the adjustment manually.

As of February 2025, the execution order of analysis periods is not guaranteed. This means that when an analysis is rerun for an experiment, the during-treatment analysis may execute before the preenrollment analysis has finished. In that case the adjustment is not performed, and Jetstream automatically falls back to unadjusted inferences. You can tell whether Jetstream fell back either by examining the logs (see dashboard) or by comparing against the unadjusted confidence intervals, which will be identical if no adjustment was performed.
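The comparison-based check can be expressed as a small helper. This is a hypothetical sketch. It assumes you have already pulled the adjusted and unadjusted intervals for the same metric and branch as `(lower, upper)` tuples; which statistic rows hold each interval is not shown here. An adjustment with a nonzero covariate coefficient practically always moves the interval, so exact agreement suggests a fallback. The interval values in the usage lines are made up.

```python
import math


def adjustment_fell_back(adjusted_ci, unadjusted_ci, rel_tol=1e-9):
    """Return True if the 'adjusted' CI is identical to the unadjusted one.

    Each CI is a (lower, upper) tuple. Identical bounds suggest that Jetstream
    fell back to unadjusted inference for this metric and branch.
    """
    return all(
        math.isclose(a, u, rel_tol=rel_tol, abs_tol=1e-12)
        for a, u in zip(adjusted_ci, unadjusted_ci)
    )


print(adjustment_fell_back((0.010, 0.050), (0.010, 0.050)))  # True: likely a fallback
print(adjustment_fell_back((0.015, 0.041), (0.010, 0.050)))  # False: adjustment applied
```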

Custom adjustments

We have built tooling to perform these calculations, and data scientists can use it directly to run custom adjusted analyses. For example, suppose one wanted to control for machine type (number of CPU cores) in an experiment aimed at improving performance.

```python
from mozanalysis.statistics.frequentist_stats.linear_models import compare_branches_lm

ref_branch = 'control'  # label of the reference branch

# One row per experimental unit, with `branch`, `performance`, and `cores` as columns.
df = ...

# Compare branches on `performance`, adjusting for the number of cores.
output = compare_branches_lm(df, 'performance', covariate_col_label='cores')
```

To dig deeper, or for something more custom, we also expose our own linear model class (MozOLS), which is optimized for analyzing experimental data, as well as functions to extract absolute and relative confidence intervals (usage example).

HERE BE DRAGONS. When performing these custom analyses, it's possible to accidentally leak during-experiment information into the adjustment, potentially invalidating any causal evidence. Confidence intervals may also be incorrect or misleading for complex adjustments.
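One common way to leak during-experiment information is to adjust on a covariate that the treatment itself affects. In the hypothetical simulation below (NumPy only), the treatment has a true effect of 1.0 on the outcome, transmitted through a during-experiment metric. Adjusting on the pre-enrollment metric recovers the effect. Adjusting on the during-experiment metric absorbs the effect and drives the estimate toward zero. All variable names and coefficients are illustrative assumptions.

```python
import numpy as np


def ols_coef(X, y):
    """Least-squares coefficients of y on the columns of X."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta


rng = np.random.default_rng(7)
n = 20_000
t = (rng.random(n) < 0.5).astype(float)          # randomized treatment T_i
x_pre = rng.normal(10, 2, size=n)                 # pre-enrollment metric: a safe covariate
m = x_pre + 1.0 * t + rng.normal(0, 1, size=n)    # during-experiment metric, moved by treatment
y = m + rng.normal(0, 1, size=n)                  # outcome; the true effect of T on Y is 1.0

ones = np.ones(n)
effect_pre = ols_coef(np.column_stack([ones, t, x_pre]), y)[1]  # adjusts on pre-period data
effect_leak = ols_coef(np.column_stack([ones, t, m]), y)[1]     # adjusts on during-experiment data
print(f"adjusting on pre-enrollment covariate:    {effect_pre:.2f}")   # close to 1.0
print(f"adjusting on during-experiment covariate: {effect_leak:.2f}")  # close to 0.0
```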

Impact and Effectiveness

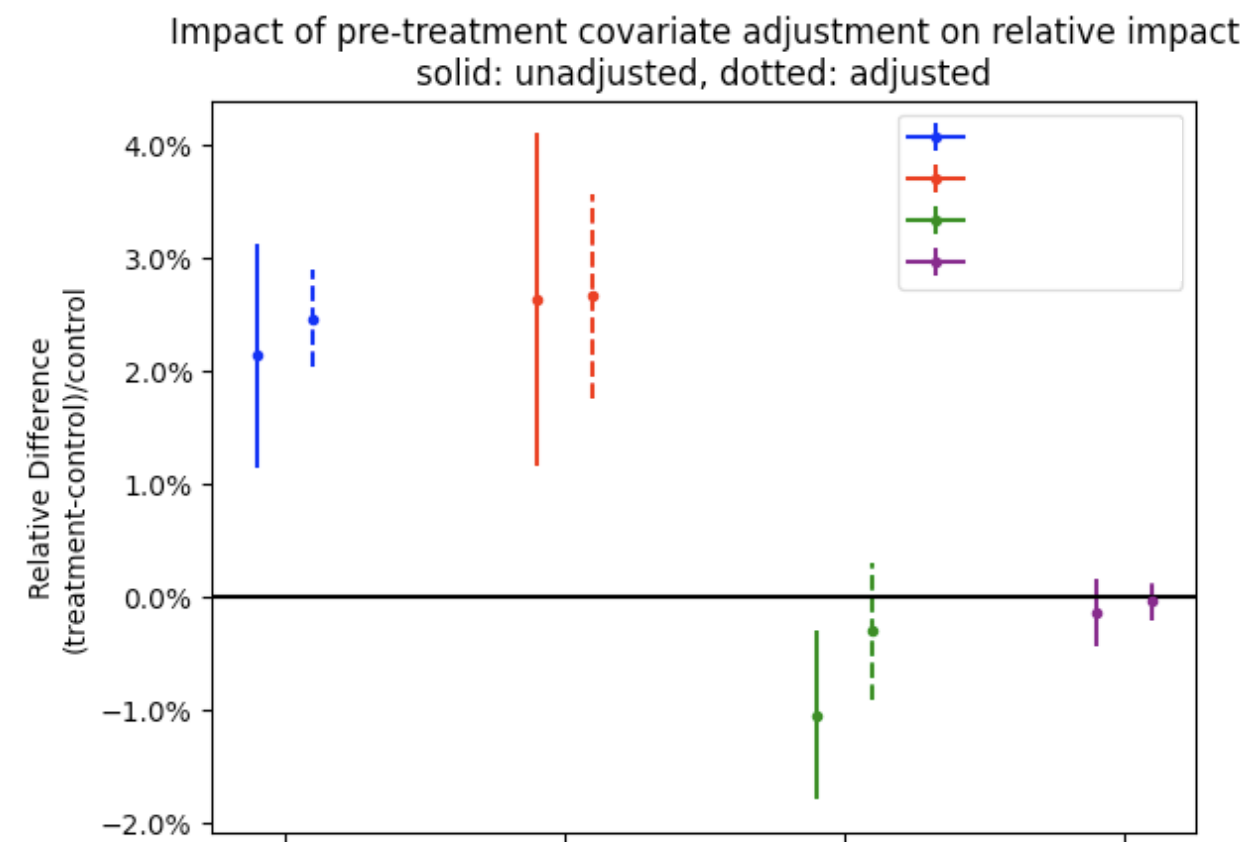

The effectiveness of the correction varies with the design of the experiment. For example, onboarding experiments have no pre-experiment data, so no adjustment can be made. Adjustments are most effective when user behavior has strong temporal correlations. See here for an internal summary of the effectiveness of this methodology. As a quick primer, the experiment shown above illustrates that adjusted inferences (dotted line) are more precise and powerful, and can remove spurious false positives (green metric).