Rollouts

Want more info on rollouts?

Reach out to us in #ask-experimenter on Slack.

What is a rollout?

Rollouts are single-branch experiments that differ from a traditional experiment in a number of ways:

- A rollout has only a single branch.

- A client can be enrolled in both one experiment AND one rollout for a given feature at the same time.

- The experiment feature value takes precedence over the rollout feature value.

- Rollouts use a separate bucketing namespace from experiments, so you don't need to worry about the populations colliding.

- Rollouts are not measurement tools. There is no comparison branch, so there is no way to tell "did the rollout change ____?"
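The precedence rule above can be modeled as a simple lookup order. This is a minimal sketch that assumes a feature value is a plain dictionary and that a client has at most one experiment and one rollout per feature. `resolve_feature_value` and its parameters are hypothetical names, not part of the Nimbus SDK. The sketch also treats each value as a whole rather than merging individual variables, and the real clients may resolve values differently.

```python
from typing import Optional


def resolve_feature_value(
    default: dict,
    rollout_value: Optional[dict] = None,
    experiment_value: Optional[dict] = None,
) -> dict:
    """Return the feature value a client sees (hypothetical model, not the Nimbus SDK).

    Precedence, highest first: experiment branch value, rollout value, default.
    Each value is treated as a whole; variables are not merged.
    """
    if experiment_value is not None:
        # Enrolled in an experiment for this feature: the branch value wins.
        return experiment_value
    if rollout_value is not None:
        # Not in an experiment, but enrolled in the rollout.
        return rollout_value
    # Enrolled in neither: use the default shipped with the application.
    return default


default = {"enabled": False}
rollout = {"enabled": True}
branch = {"enabled": False, "label": "treatment"}

print(resolve_feature_value(default, rollout, branch))  # experiment value wins
print(resolve_feature_value(default, rollout))          # rollout value applies
```

Because the experiment always wins, a client enrolled in both sees the experiment branch. The rollout value only reaches clients that are not in the experiment.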

When should I use a rollout instead of an experiment?

- Launching a winning branch of an experiment faster than the release trains allow.

- Launching a configuration to non-experiment users during an experiment, after a short period of verification.

- Remotely configuring different settings of a feature for different audiences.

- Acting as a "kill switch": launching a feature, but turning it off if something goes wrong.

When should I not use a rollout?

Don't use a rollout if the feature has not yet been extensively tested, isn't production quality, or needs a period of validation on the release trains.

Can I run a Nimbus experiment and a rollout simultaneously?

It's possible, but bear in mind that rollouts are not measurement instruments. Experiments are.

If you have uncertainty about the effect of the feature, you may wish to be guided by experiment results instead of deploying the feature immediately.

Before you do this, you should consider:

- Future experimentation needs

  - Once you deploy the feature to someone, you lose the ability to observe what happens when you introduce that feature to that user.

  - Consider whether you need holdbacks.

- Decision criteria

  - Identify the risks you're trying to mitigate with a rollout, and decide whether you need multiple stages.

  - If you have multiple stages, how will you know whether to advance or roll back?

  - What signals will help you make your decision? Where will they come from?

  - If you are relying on the experiment to guide you, make sure that the timelines are compatible.

- Rollouts are not measurement tools. There is NO CONTROL to compare to, and no results page is generated. With a rollout, you are potentially watching manually for bugs or user-sentiment issues, or observing telemetry directly. There is no data science support for rollouts. There is an OpMon dashboard, but it provides raw data without comparison.

You would need to:

- Launch an experiment that targets a fixed portion of the population (sized appropriately for whatever you are trying to measure).

- When you are ready, launch a rollout at a low percentage of the population, using the steps below.

- As the rollout proceeds, consult your decision criteria. Change the percentage of the rollout by editing the population percentage.
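Writing the stages down ahead of time makes the decision criteria concrete. Below is a minimal sketch of a staged plan. It assumes you have already chosen your stages and can reduce your decision signal to a yes/no answer. `STAGES`, `next_percentage`, and modeling a rollback as 0% are hypothetical choices for illustration, not Experimenter settings. In Experimenter, each step is still a manual edit of the population percentage.

```python
from typing import Sequence

# Hypothetical plan: population percentages for each stage, starting from 0%.
STAGES: Sequence[int] = (0, 1, 5, 25, 50, 100)


def next_percentage(current: int, signal_ok: bool, stages: Sequence[int] = STAGES) -> int:
    """Suggest the next population percentage for a staged rollout.

    Advance one stage when the decision signal looks healthy; otherwise roll back,
    modeled here as returning to the first stage (0%). Illustrative rule only.
    """
    if not signal_ok:
        return stages[0]
    if current not in stages:
        raise ValueError(f"{current}% is not one of the planned stages {list(stages)}")
    index = stages.index(current)
    # Stay at the final stage once it has been reached.
    return stages[min(index + 1, len(stages) - 1)]


pct = 1
pct = next_percentage(pct, signal_ok=True)   # advances to 5
pct = next_percentage(pct, signal_ok=False)  # rolls back to 0
```

Whatever produces `signal_ok` (bug reports, user sentiment, raw OpMon data) is up to your decision criteria. The sketch only encodes the "advance or roll back" choice.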

Keep in mind that if you do plan to release the experience to 100% of users, you should make sure it meets production quality standards.

Are there typical rollout patterns I should follow?

This document covers common patterns for people using a rollout to mitigate risk around technical issues, brand perception, and load capacity/service scaling.

How do I create a rollout in Experimenter?

There are two ways to create a rollout from the Experimenter UI. A typical case: you have a "winning branch" from an in-flight experiment and want to easily set some percentage of the non-experiment population to that feature configuration.

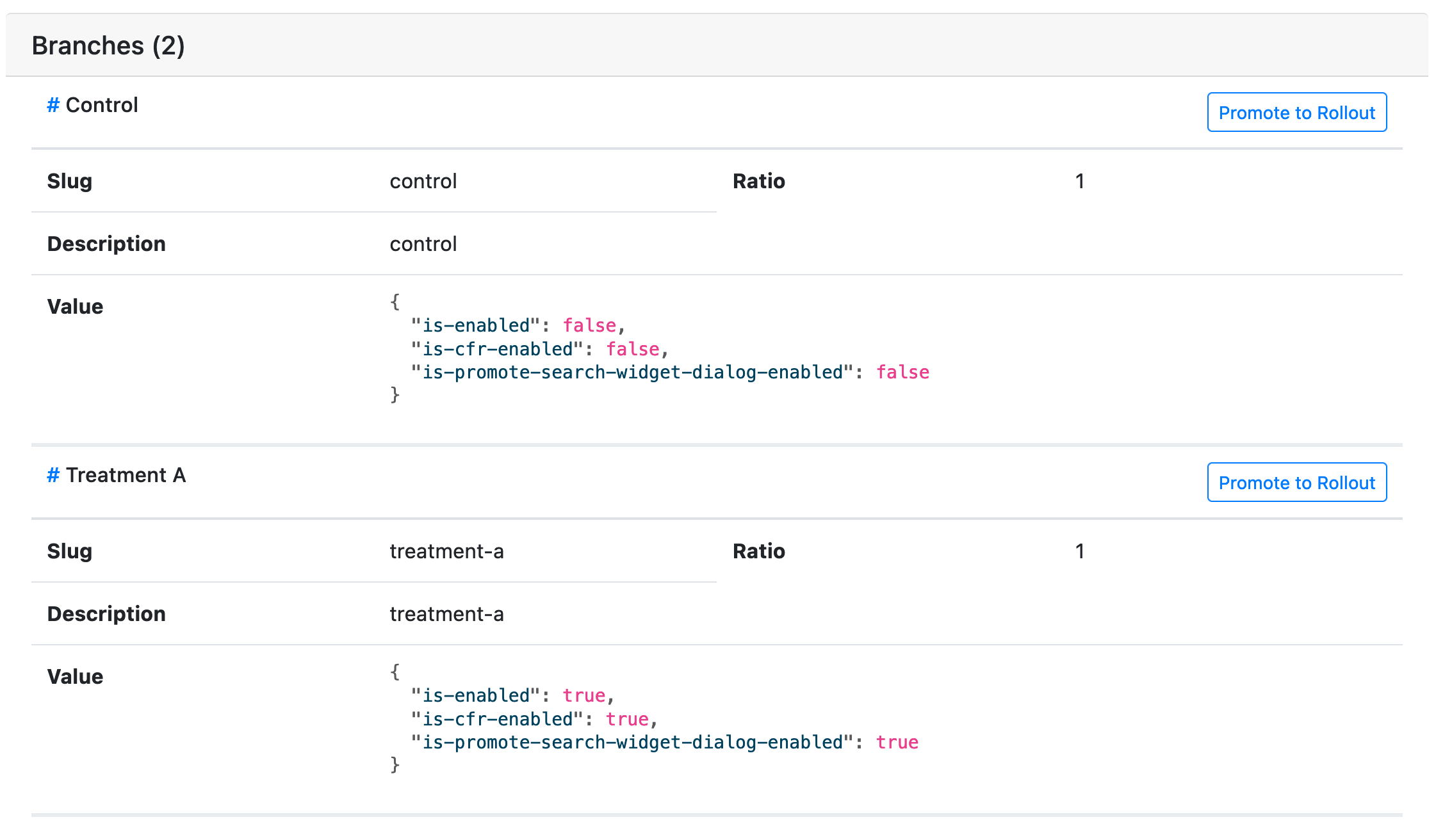

- To create a rollout from an existing experiment, a branch can be cloned and a new rollout created (similar to the way "Clone" works for experiments). This is done using the "Promote to Rollout" buttons on the Summary page of an experiment:

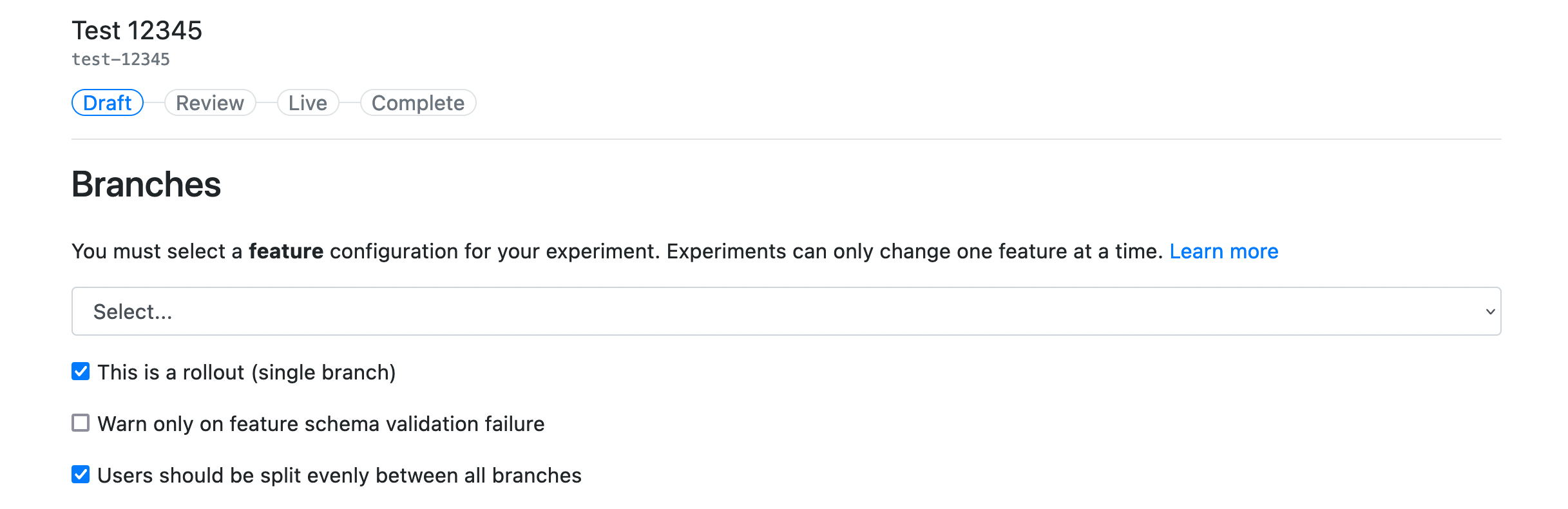

- A rollout can also be manually created (without cloning) through the "Create new" button on the Experimenter home screen. To mark the new item as a rollout, check the "This is a rollout" box on the "Branches" page:

Incrementing your rollout's population percentage

Live editability

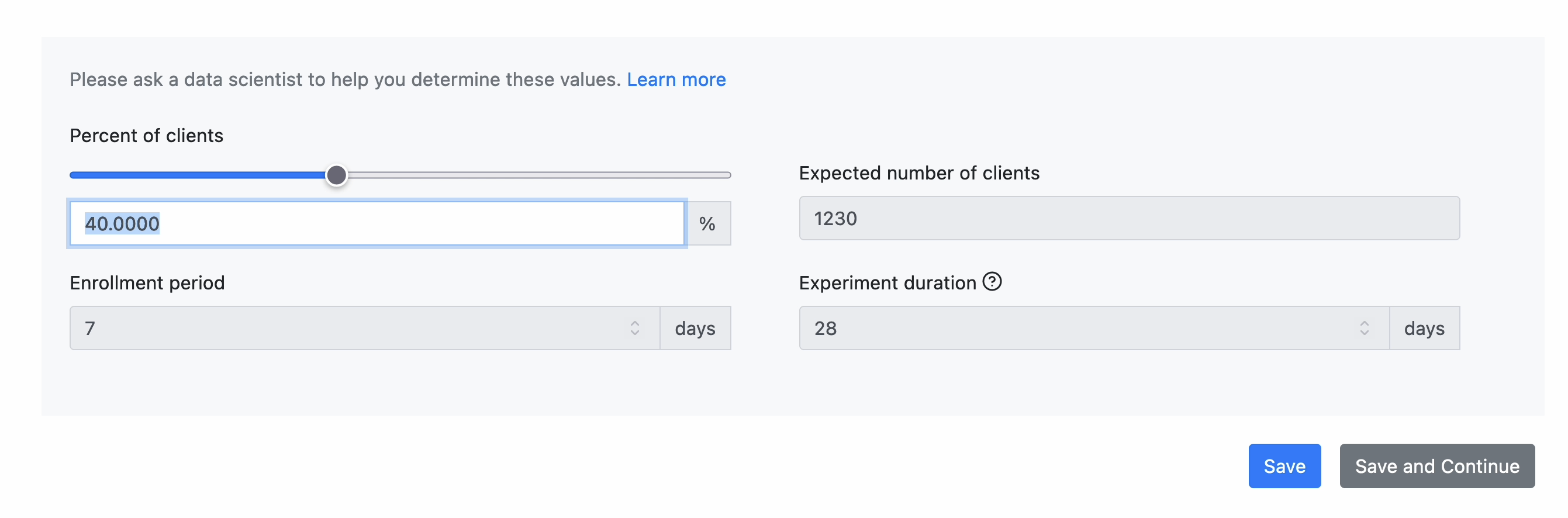

The population percentage of a rollout ("Percentage of clients" on the Audience page) can be edited after the rollout is launched. To change it, open your rollout and click Audience in the left sidebar. From there, you can make edits and save them.

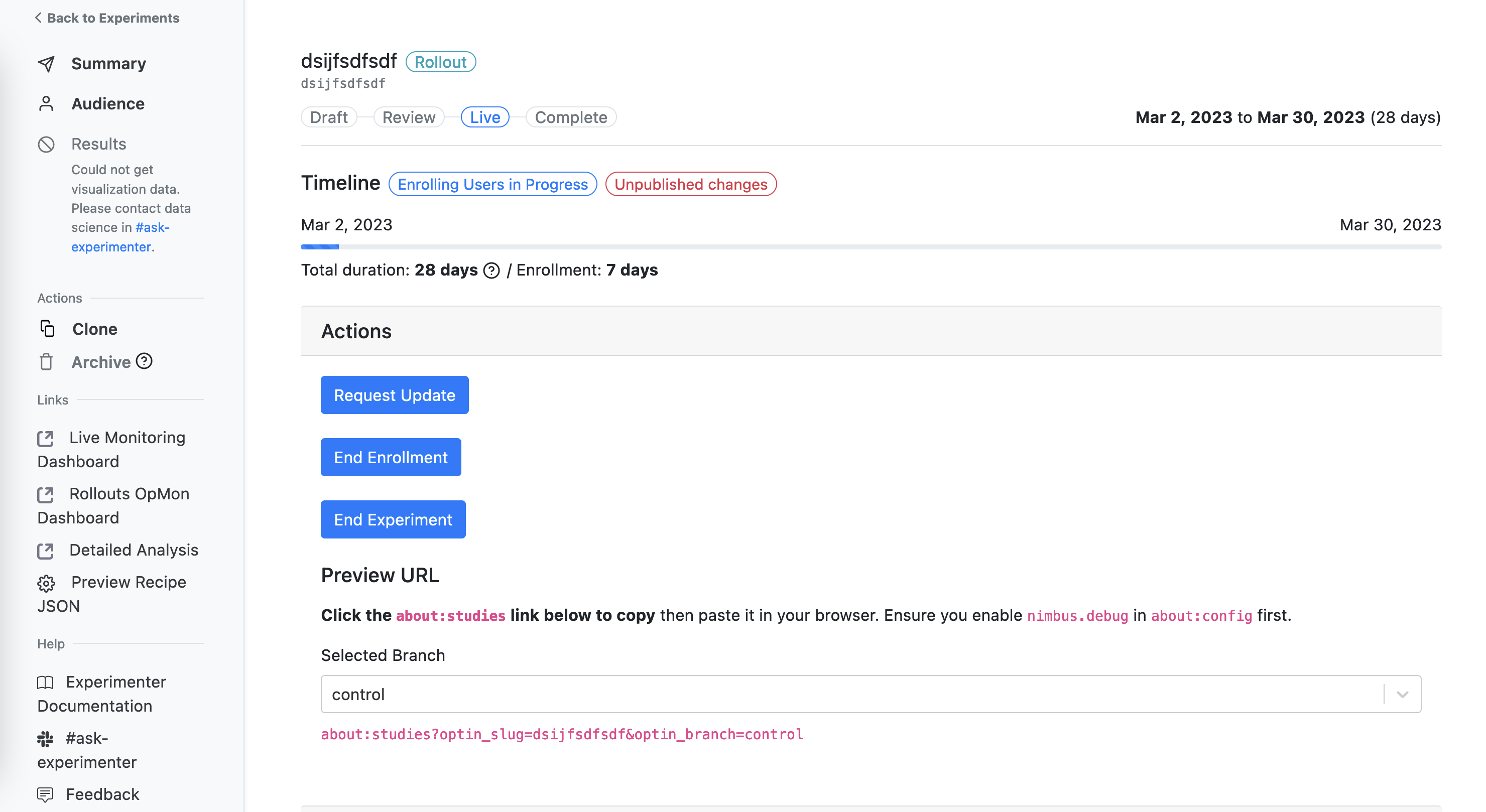

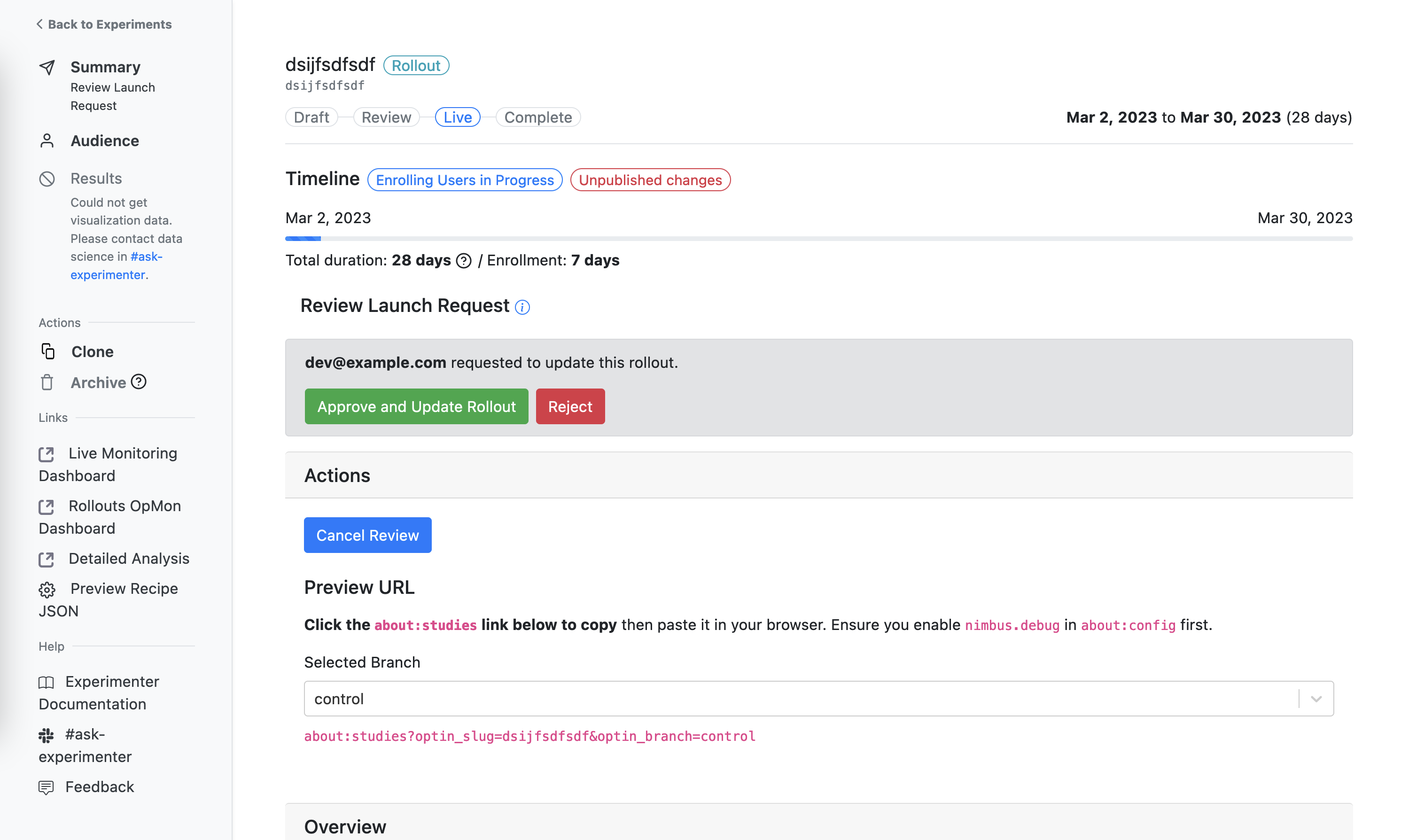
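A common way to implement percentage targeting is to hash each client into a fixed set of buckets and enroll the clients whose bucket falls below a cutoff. Under that scheme, raising the percentage only adds clients. A separate namespace, like the one rollouts use instead of the experiment namespace, produces independent bucketing. The sketch below illustrates both properties. It assumes SHA-256 hashing over 10,000 buckets. `bucket`, `is_enrolled`, and the namespace string are hypothetical, and this is not Nimbus's actual bucketing code.

```python
import hashlib

TOTAL_BUCKETS = 10_000  # Assumed bucket count for this sketch.


def bucket(client_id: str, namespace: str) -> int:
    """Map a client to a stable bucket in [0, TOTAL_BUCKETS) within a namespace."""
    digest = hashlib.sha256(f"{namespace}:{client_id}".encode("utf-8")).digest()
    # Interpret the first 8 bytes as an unsigned integer, then reduce to a bucket index.
    return int.from_bytes(digest[:8], "big") % TOTAL_BUCKETS


def is_enrolled(client_id: str, namespace: str, percent: float) -> bool:
    """Enroll clients whose bucket is below the cutoff for `percent` (0 to 100)."""
    cutoff = int(TOTAL_BUCKETS * percent / 100)
    return bucket(client_id, namespace) < cutoff


clients = [f"client-{i}" for i in range(1000)]
ns = "example-feature-rollout"  # Hypothetical rollout namespace.
at_5 = {c for c in clients if is_enrolled(c, ns, 5)}
at_25 = {c for c in clients if is_enrolled(c, ns, 25)}

# Under this scheme, raising the percentage keeps everyone already enrolled.
assert at_5 <= at_25
```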

Once an edit has been made, you will see a "Request update" button on the Summary page under the available Actions:

Once you request an update, it goes through the same review flow as launching an experiment (approval in Experimenter and approval in Remote Settings).

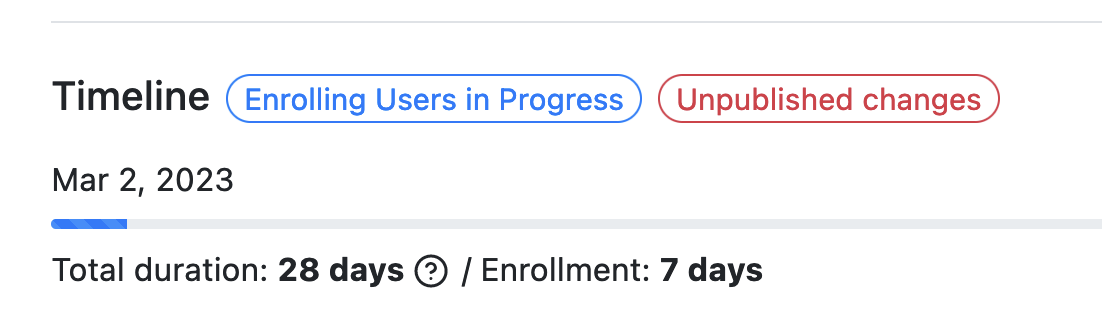

You can make multiple edits to your rollout before requesting an update. A red "Unpublished changes" status pill next to "Timeline" shows when there are unpublished changes:


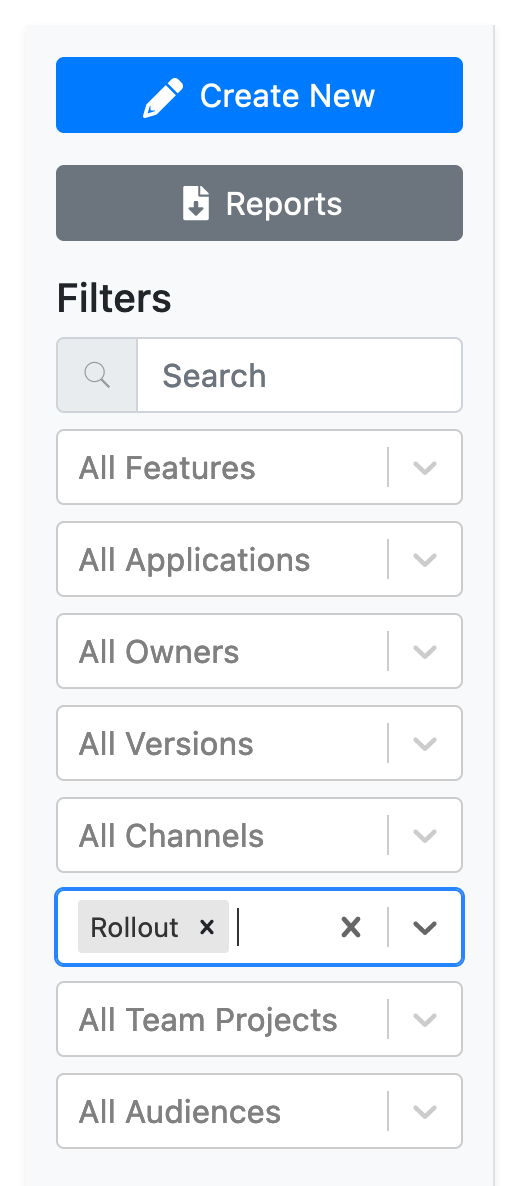
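The edit-and-publish flow described above can be modeled as a small state machine. This is a minimal sketch. The state names are illustrative and are not Experimenter's internal status values. The sketch also assumes that edits are blocked while an update is under review, which this page does not specify. Edits accumulate as unpublished changes. A requested update must then be approved in Experimenter and in Remote Settings before the new percentage is published.

```python
from dataclasses import dataclass
from enum import Enum, auto


class ReviewState(Enum):
    LIVE = auto()                     # Launched; no update under review.
    PENDING_EXPERIMENTER = auto()     # Update requested; awaiting approval in Experimenter.
    PENDING_REMOTE_SETTINGS = auto()  # Approved in Experimenter; awaiting Remote Settings.


@dataclass
class LiveRollout:
    """Hypothetical model of a launched rollout's population percentage."""

    published_percent: float
    draft_percent: float
    state: ReviewState = ReviewState.LIVE

    @property
    def has_unpublished_changes(self) -> bool:
        # Corresponds to the red "Unpublished changes" pill.
        return self.draft_percent != self.published_percent

    def edit_percent(self, percent: float) -> None:
        # Assumption for this sketch: edits are only accepted while no review is pending.
        if self.state is not ReviewState.LIVE:
            raise RuntimeError("an update is already under review")
        self.draft_percent = percent

    def request_update(self) -> None:
        # "Request update" only applies once there is something to publish.
        if self.state is not ReviewState.LIVE or not self.has_unpublished_changes:
            raise RuntimeError("no unpublished changes to submit")
        self.state = ReviewState.PENDING_EXPERIMENTER

    def approve_in_experimenter(self) -> None:
        if self.state is not ReviewState.PENDING_EXPERIMENTER:
            raise RuntimeError("no update awaiting Experimenter approval")
        self.state = ReviewState.PENDING_REMOTE_SETTINGS

    def approve_in_remote_settings(self) -> None:
        if self.state is not ReviewState.PENDING_REMOTE_SETTINGS:
            raise RuntimeError("no update awaiting Remote Settings approval")
        # The change is published, so there are no longer unpublished changes.
        self.published_percent = self.draft_percent
        self.state = ReviewState.LIVE


rollout = LiveRollout(published_percent=5, draft_percent=5)
rollout.edit_percent(10)
rollout.edit_percent(25)  # Multiple edits before requesting an update.
rollout.request_update()
rollout.approve_in_experimenter()
rollout.approve_in_remote_settings()
```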

Where can I find rollouts?

Use the filters on the Experimenter home page. You can either filter by feature to see all experiments and rollouts for that feature, or filter by experiment type to show only "experiment" or "rollout" entries:

Supported platforms and minimum version targeting

Experimenter currently supports the following platforms:

- Desktop

- Fenix (Firefox for Android)

- Focus Android

- Firefox iOS

- Focus iOS

The minimum version supported for rollouts on all of the platforms listed above is currently 105. See Experimenter for more details.
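The version floor can be expressed as a simple targeting check. This is a minimal sketch that assumes a dotted version string whose first component is the major version (for example `"105.0.1"`). The platform identifiers and `supports_rollouts` are hypothetical labels for the platforms listed above, not Experimenter's application keys.

```python
MIN_ROLLOUT_VERSION = 105  # Minimum major version for rollouts, per this page.

# Hypothetical identifiers for the platforms listed above.
SUPPORTED_PLATFORMS = {"desktop", "fenix", "focus_android", "firefox_ios", "focus_ios"}


def supports_rollouts(platform: str, version: str) -> bool:
    """Return True if a client on `platform` at `version` can receive rollouts."""
    if platform not in SUPPORTED_PLATFORMS:
        return False
    # Compare only the major version, e.g. "105.0.1" -> 105.
    major = int(version.split(".", 1)[0])
    return major >= MIN_ROLLOUT_VERSION


print(supports_rollouts("fenix", "104.2.0"))    # False
print(supports_rollouts("desktop", "105.0.1"))  # True
```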

Automated analysis

- The dashboards for rollouts that OpMon generates follow this URL pattern:

https://mozilla.cloud.looker.com/dashboards/operational_monitoring::<slug with underscores>
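Given a rollout's slug, the dashboard URL can be built mechanically from the pattern above. This is a minimal sketch that assumes hyphens are the only slug characters that need converting to underscores. `opmon_dashboard_url` and the example slug are made up for illustration.

```python
BASE = "https://mozilla.cloud.looker.com/dashboards/operational_monitoring::"


def opmon_dashboard_url(slug: str) -> str:
    """Build the OpMon dashboard URL for a rollout slug (hyphens become underscores)."""
    return BASE + slug.replace("-", "_")


print(opmon_dashboard_url("my-feature-rollout-release"))
# https://mozilla.cloud.looker.com/dashboards/operational_monitoring::my_feature_rollout_release
```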